The word “crisis” is often used to describe the peer-review system, not only in terms of quality of reviews but also quantity. To hear some academics tell it, fielding peer-review requests is a nearly full-time job. But preliminary research on the input-output balance in peer review suggests there is no real crisis, at least as far as quantity is concerned. That is, the professors who are writing the most get asked to review the most, meaning the system is in balance -- sort of.

“Most academics get few peer-review requests and perform most of them,” reads a new write-up of the research shared with Inside Higher Ed. “Reviewing is strongly correlated with academic productivity -- research-productive scholars get more requests and perform more reviews. However, the ratio of peer reviews performed to article submissions is also lower for more productive scholars.”

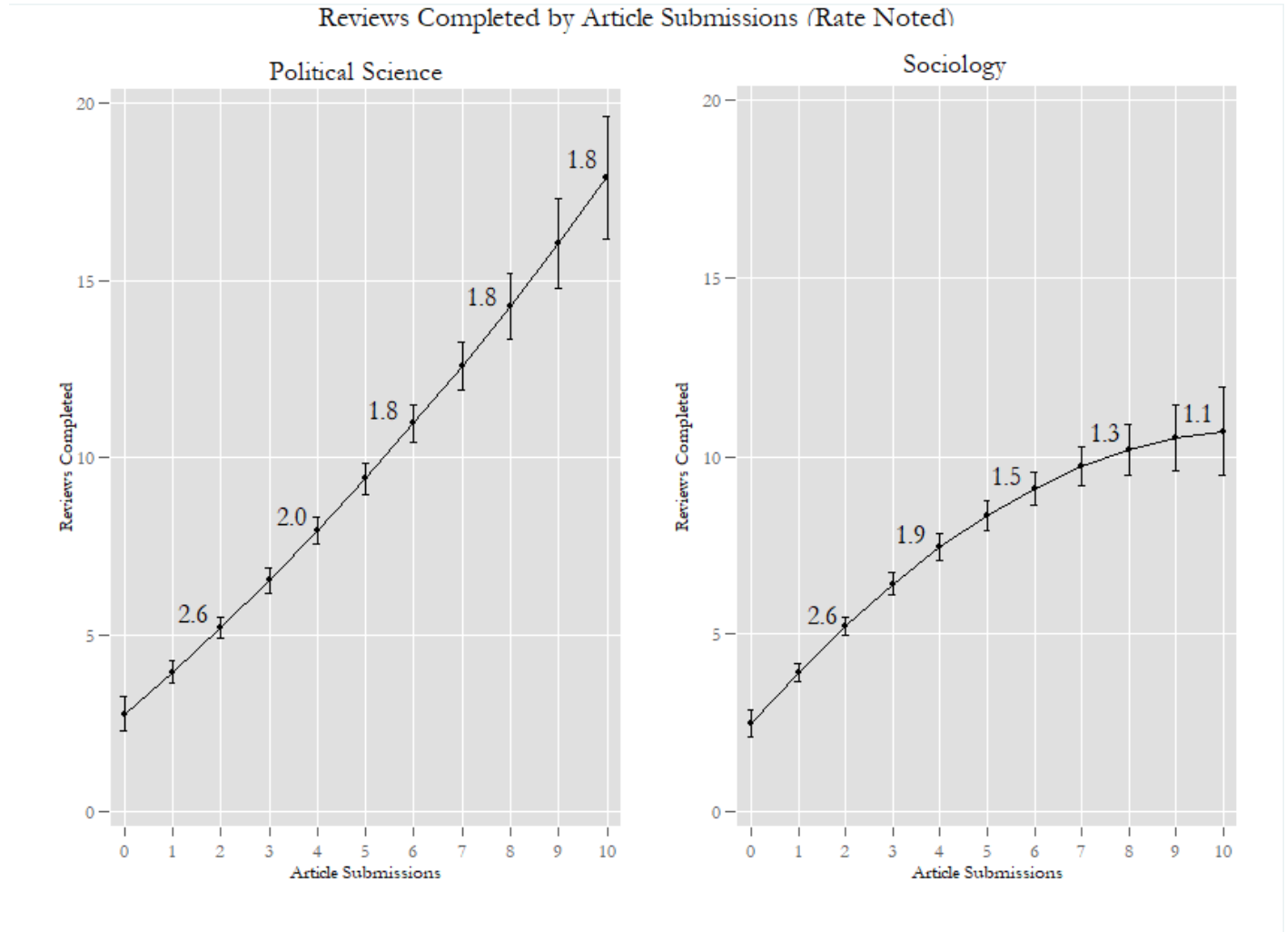

The authors of the paper disagree as to whether peer review should be more “democratic,” encompassing a greater share of academics, or whether professors who feel overtaxed by the peer-review system just need to adopt a more obligation-oriented view of it. Specifically, the authors estimate that academics -- at least social scientists -- should be reviewing approximately three papers for each one they submit.

“The data point to the natural human tendency to overestimate the cost of things, negatively, without really thinking of paying it forward into a system,” said co-author Amy Erica Smith, an assistant professor of political science at Iowa State University. “If somebody sends out, say, seven articles in a year, then that would imply that you need to do 18 or so journal reviews in a year to make up for those seven manuscripts.” The figure accounts for the fact that each submission likely will be read by multiple reviewers, give or take initial rejections by journal editors and revisions.
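As a rough illustration of that arithmetic, here is a minimal Python sketch. The ratio of about 2.6 reviews per submission is an assumption backed out of Smith's own example (18 reviews for seven manuscripts). The function name and the default value are hypothetical and are not taken from the paper.

```python
# Assumed ratio, backed out of Smith's example: 18 reviews for 7 manuscripts.
# This is an illustration only, not the authors' model.
REVIEWS_PER_SUBMISSION = 18 / 7  # roughly 2.6


def reviews_owed(submissions: int, ratio: float = REVIEWS_PER_SUBMISSION) -> float:
    """Estimate the reviews a scholar would perform to offset their submissions."""
    if submissions < 0:
        raise ValueError("submissions must be non-negative")
    return submissions * ratio


if __name__ == "__main__":
    for n in (1, 3, 7):
        print(f"{n} submissions -> about {reviews_owed(n):.1f} reviews owed")
```

Changing the ratio argument shows how sensitive the "debt" is to assumptions about desk rejections and revisions, which the authors' estimate of roughly three reviews per submission also depends on.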

Beyond human tendencies, Smith said, academics aren’t “socialized to think of doing reviews and doing research as part of the same system we benefit from. We think of them as two different things.”

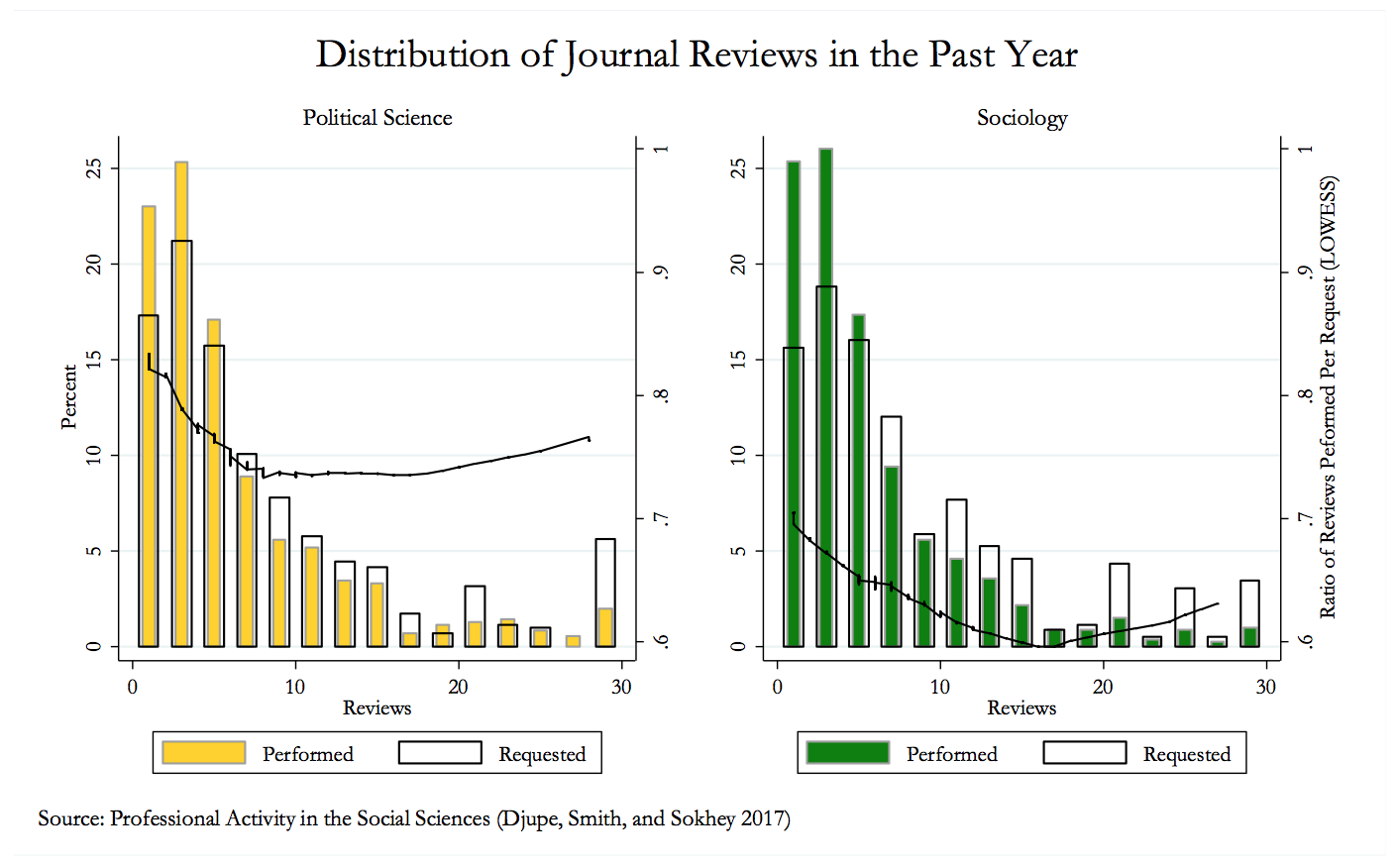

Those conclusions are based on an online survey conducted earlier this year of 900 faculty members in political science departments nationwide, and on a second, similar survey of 958 sociology professors. The median political scientist and median sociologist in each survey reported receiving five review requests per year. The median political scientist completed four of them, while the median sociologist completed three.

Some social scientists do receive many more requests: in both political science and sociology, the top 10 percent of those surveyed said they received 20 or more requests per year. Those professors also tended to be less likely than their peers to accept all or most of them. Yet some at the high end of the request curve are prone to accept even more than reviewers in moderate demand.

What drives this tremendous inequality in peer reviewing, the authors ask. Why do a small proportion of potential reviewers end up with so many requests? Their answer is academic productivity. People who submit and publish more work get asked to review more. In sociology, according to the survey, 18 percent of peer reviewers do half of peer reviews. In political science, it’s 16 percent of reviewers doing half the work.

“The fact that the most visible -- and probably vocal -- individuals in the two disciplines get the most review requests certainly exacerbates the perception of a crisis,” wrote Smith and her co-authors, Paul Djupe, an associate professor of political science at Denison University, and Anand Edward Sokhey, associate professor of political science at the University of Colorado at Boulder.

Djupe in an interview had some succinct diagnoses for why the quantity problem seems to persist: visible, in-demand “squeaky wheels” making lots of noise -- sometimes in the form of a “humble brag,” as in, “‘I do so many, but I can’t imagine saying no.’”

Some 25 percent of sociologists surveyed submitted no research in the last year but performed reviews anyway, as did 23 percent of political scientists. The authors say that these academics could be paying back accumulated past peer review "debts," but that the review system nevertheless depends on their "generosity." Those who had submitted just one article in the past year had a “credit” of between 1.1 and 1.9 reviews in sociology and between one and 1.7 reviews in political science.

By contrast, the authors note, those who submitted a lot of work to journals tended to be “in the hole.” Depending on modeling assumptions, researchers ran out of review “credits” when they submitted between two and five articles, in both fields.
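The "credit" and "in the hole" language amounts to a simple balance: reviews performed minus reviews owed for one's own submissions. The sketch below is a hedged illustration of that idea. The function names and the sample inputs are hypothetical, and the per-submission cost is a modeling assumption of the kind the authors say their results depend on.

```python
def review_balance(reviews_done: float, submissions: int,
                   reviews_per_submission: float) -> float:
    """Positive result: a review 'credit'. Negative result: 'in the hole'."""
    return reviews_done - submissions * reviews_per_submission


def break_even_submissions(reviews_done: float,
                           reviews_per_submission: float) -> float:
    """Number of submissions at which the review 'credit' runs out."""
    if reviews_per_submission <= 0:
        raise ValueError("reviews_per_submission must be positive")
    return reviews_done / reviews_per_submission


if __name__ == "__main__":
    # Hypothetical scholar: 4 reviews performed, assumed cost of 3 reviews per submission.
    for n in (0, 1, 2, 5):
        print(n, "submissions -> balance", review_balance(4, n, 3))
```

Under these made-up inputs the balance turns negative at the second submission. A lower assumed cost per submission pushes the break-even point higher.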

The most productive political science scholars, however, were more generous than their moderate-reviewing peers. Sociologists who wrote the most, by contrast, owed the greatest number of reviews.

Imagining a more “equitable distribution of reviewing,” Djupe and Smith exchanged friendly arguments. Smith said she worried about overzealous efforts to democratize reviewing, in that those who don’t write much and therefore don’t get asked to review much might have jobs that require a disproportionate emphasis on teaching versus research. So asking them to do more peer reviewing would place additional burdens on them.

Djupe, meanwhile, favored democratization, in part because it could improve social science by reducing the role of “gatekeepers” and introducing new voices to the field.

The recent survey isn’t the first to question fairness in the peer-review system. In a co-written 2010 paper, Jeremy Fox, a professor of ecology at the University of Calgary, even proposed a form of currency -- the “PubCred” -- by which professors who submit reviews can “earn” the ability to submit articles.

Fox said Monday that the new data didn’t surprise him, and that he’d observed similar input-output patterns within ecology. The big, open question, he said, is “what exactly would constitute a crisis in the peer-review system?” Many journals are finding that they have to invite more reviewers to get at least two to agree to review a given manuscript, for example, he said. In response, journals are not only inviting more reviewers per submission, they're also starting to broaden their reviewer bases. That means including more postdoctoral fellows or senior Ph.D. candidates, he said. And more journal editors are rejecting more submissions they consider to be unpublishable at the desk, with no reviews at all.

Does all that constitute a crisis? “I personally don't think it does,” Fox said, noting his opinion had changed somewhat since 2010.

Andrew Gelman, a professor of political science and statistics at Columbia University, has in the past argued against peer review as a wasteful system and instead proposed postpublication review. He said via email Monday that he still opposes traditional peer review in that “every paper, no matter how crappy, gets reviewed multiple times (for example, consider a useless paper that gets three reviews and is rejected from journal A, then gets three reviews and is rejected from journal B, then gets three reviews and is accepted in journal C).” Traditional peer review’s only virtue, he added, is that it would be hard to recreate any other system that attracts the unpaid labor of thousands of people.

By contrast, Gelman said, even “the most important papers don't get traditional peer review after publication. Postpublication review makes more sense in that the reviewer resources are focused on the most important or talked-about papers.”

Philip Cohen, a professor of sociology at the University of Maryland at College Park, directs SocArXiv, an open-access archive of social science research that seeks to improve the transparency and efficiency of peer review. He didn’t propose ending prepublication peer review but suggested serious changes, including not discarding reviews when a paper is rejected. That way, the review process doesn’t have to start over again when an author submits to a new journal. “Why can’t reviews travel with the paper, or even better, be posted on a central repository for editors and other reviewers to consult?” he said.

Cohen further proposed that all reviews be made public, or at least that the option be available. Reviews are scholarly work and should therefore be part of the scholarly record, he said. And making them public would not only help reviewers get credit for their work but might also push them to write reviews of a higher quality.

“Writing reviews is work we do out of professional obligation and interest in the quality of scholarship,” Cohen said. “But we get basically nothing for it. Being a good reviewer, in quality and quantity, is a tremendous service that goes unrecognized.”

Smith said she didn’t see a reason that the broad arguments of her paper wouldn’t apply to other disciplines. Similar studies in other disciplines have already yielded parallel results. A 2016 paper, for example, found that 20 percent of life scientists completed between 69 percent and 94 percent of reviews. While the paper called these academics “peer-review heroes,” it also noted that the overall supply of life scientists was rising faster than the demand for reviews. (Data in that study were taken from Medline, a bibliographic database for the life sciences.)

At the very least, Smith said, the social sciences could stand to somehow recognize the work of peer review.

Crediting the initiative Publons for attempting to do just that, the new write-up says, “We can only begin to make headway on problems with peer review from a position that is well informed by accurate data. Documenting and rewarding peer review is a great step in that direction.”