Harvard University

Research suggests that academic jobs in a variety of fields overwhelmingly go to graduates of elite academic departments, and that those graduates don’t necessarily end up being the most productive researchers. A new study argues that academic publishing -- at least in the humanities -- is guilty of the same bias, choosing papers based on where they come from over what they say.

“Our goal is to begin to shed light on the academic publication system with a particular emphasis on questions of institutional and intellectual inequality,” reads a write-up of the research by co-author Andrew Piper, professor and William Dawson Scholar of German and European Literature at McGill University. “[There] is a strong bias towards a few elite institutions who exercise outsized influence not only on who gets tenure-track jobs but also in who gets published and where. … As academics we need to better understand both the past and present of our publication system and have open conversations about what a more egalitarian and institutionally diverse intellectual system might look like.”

The study, “Publication, Power and Patronage: On Inequality and Academic Publishing,” is forthcoming in Critical Inquiry. Piper and his research partner, Chad Wellmon, associate professor of Germanic languages and literatures at the University of Virginia, attempt to make their case quantitatively and conceptually. For their data, they analyzed a hand-curated set covering 45 years’ worth of articles (5,664 total) in four top humanities journals -- Critical Inquiry, New Literary History, PMLA and Representations. They took into account each author’s college or university affiliation at time of publication, Ph.D.-granting institution and gender. Some 3,547 authors were represented, from 344 Ph.D. institutions and 721 authorial institutions.

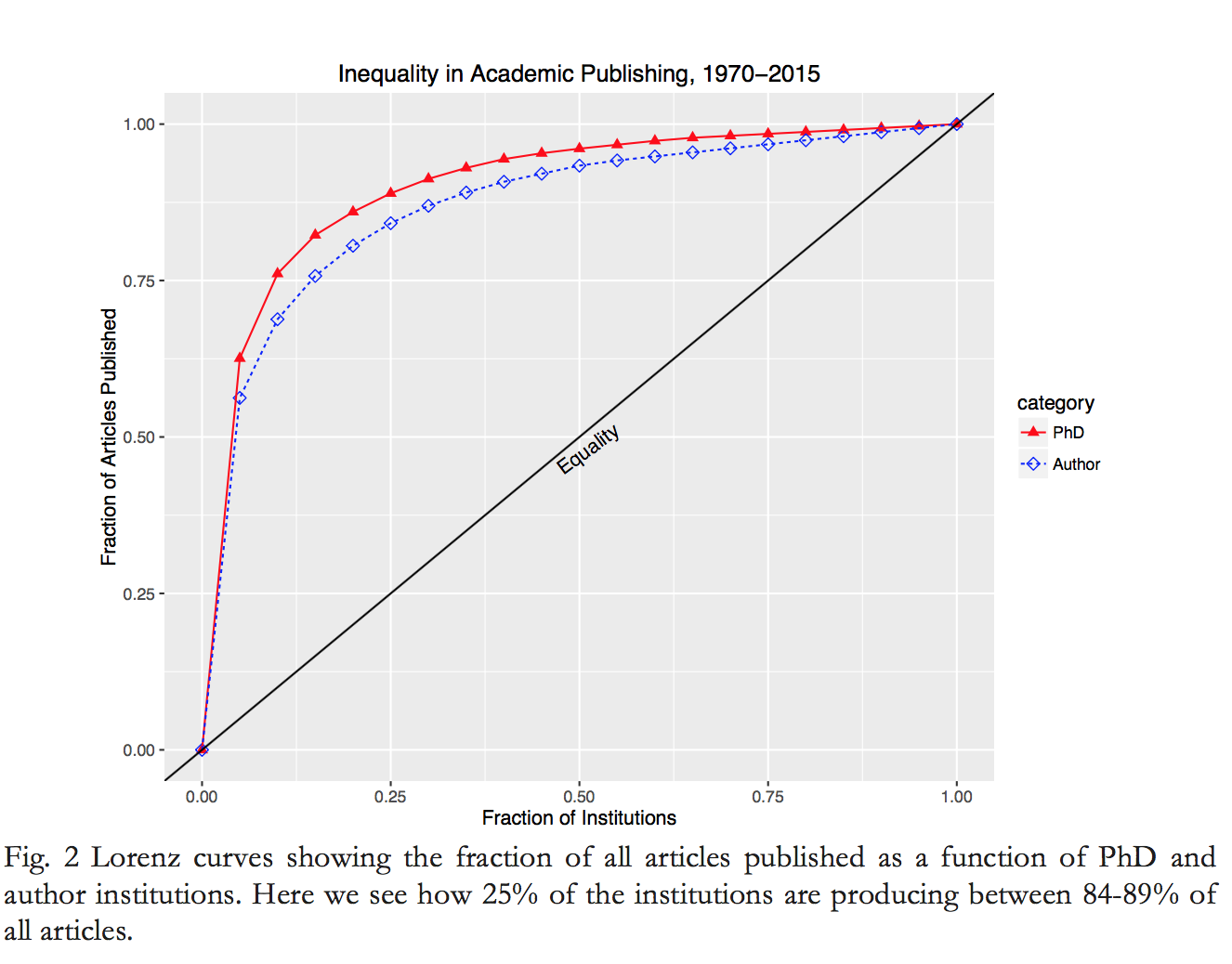

Regarding Ph.D.-granting institutions, the top 20 percent of universities represented in the sample account for 86 percent of the articles, and the top 10 universities represented alone account for 51 percent: Ph.D.s from Columbia, Cornell, Johns Hopkins, Harvard, Princeton, Stanford and Yale Universities and the Universities of Chicago, Oxford, and California, Berkeley, wrote 2,866 of 5,664 articles.

Authors with Ph.D.s from just Yale and Harvard account for 20 percent of all articles.

The authors say academic publishing has long favored prestige, but note that considering just the past 25 years of publishing data, from 1990 to the present, doesn’t significantly change their results.

Similarly, they say, institutional affiliation at time of publication presents only a slightly different story. While the top 20 percent of institutions still account for over 80 percent of all articles, for example, the top 10 institutions now account for 31 percent of articles.

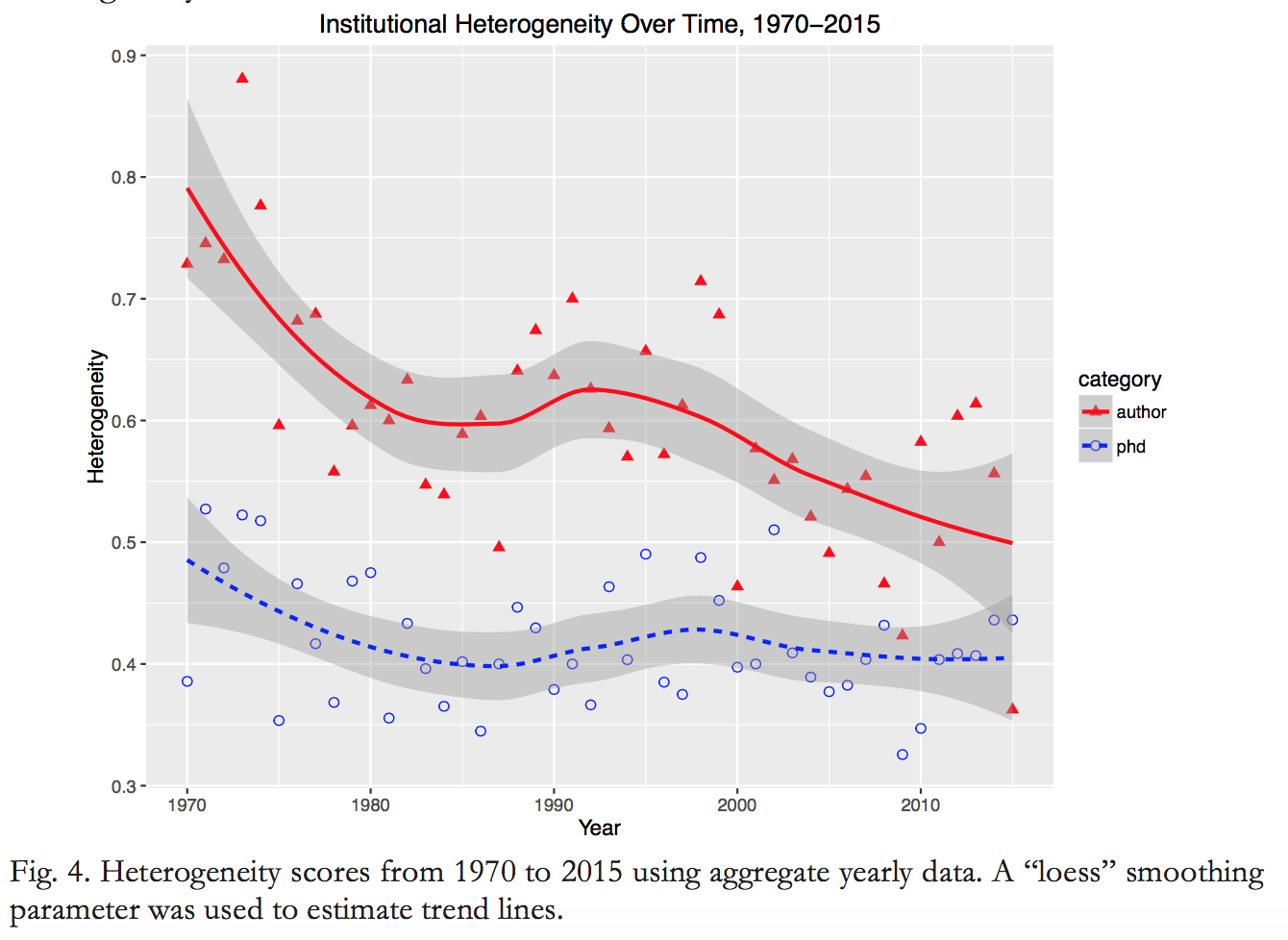

Piper and Wellmon also calculated heterogeneity “scores” for journals, determining what kind of institutional variety they included. PMLA, the journal of the Modern Language Association, had the highest median heterogeneity score for authors’ college or university affiliation at 83 percent, “meaning just under 20 percent of articles published in a given year are generally by authors from the same institutions.” New Literary History was close, at 82 percent. Representations and Critical Inquiry, meanwhile, had lower scores: 71 percent and 69 percent, respectively. The authors attribute the difference between the two sets of journals to PMLA and New Literary History maintaining a system of blind peer review throughout the process.

Could anything but bias be contributing to the results?

The paper notes that the rankings-conscious hiring patterns paralleling their findings also contribute to them -- but only in part.

“Since so few institutions train such an outsized proportion of those graduate students who get jobs, it makes sense that we would see something similar when it comes to publication,” the paper says. “And yet the bias in publication towards a smaller number of institutions is actually higher for the prestigious journals we studied than it is in the field of hiring.”

What about program size? The authors did see some correlation between the size of the graduate program, calculated as the number of dissertations produced per year in the field of “literature,” and the number of publications. Yet, again, they say it doesn’t explain away the disparity.

“For data since 1990, the correlation coefficient -- which measures the statistical relationship between two or more random variables -- is 0.367 between program size and number of articles published,” reads the study. “In other words, some but not all of the effect we are seeing is due to the more elite schools also having larger programs, but only a little more than a third of this effect can be explained by program size alone.”

Over all, then, “our study suggests that the concentration of power and prestige only intensifies as we move from hiring to publishing.”

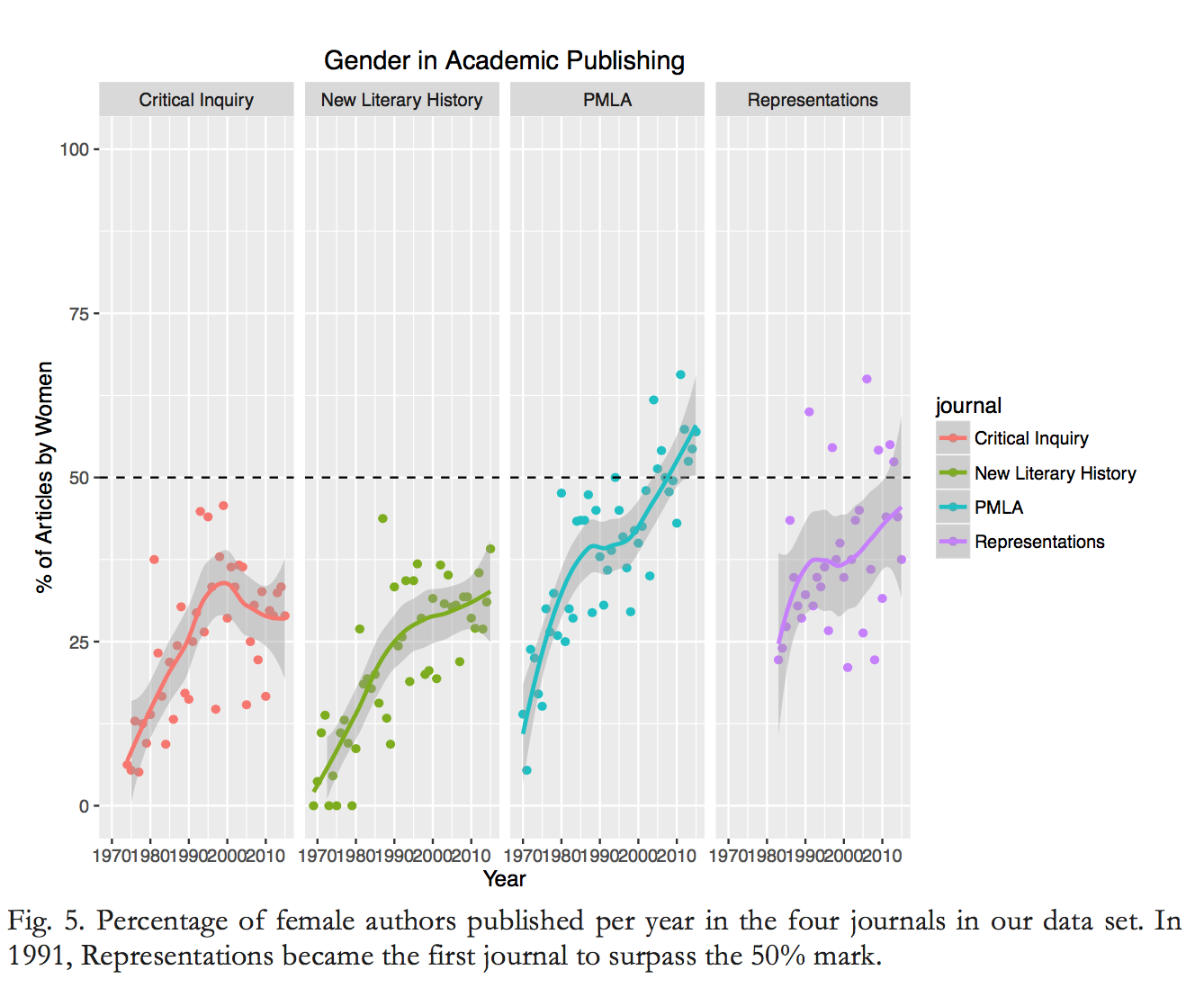

Beyond prestige bias, Piper and Wellmon also examined gender bias. They found that PMLA and Representations have made “strides” toward gender parity. Yet the other two journals have not shown a single year in which female authors have outnumbered men. And in a larger sample of 20 humanities journals taken over the past five years, they say, about three-quarters had average rates of female authorship well below parity, even as the number of female graduate students in humanities programs has grown substantially in recent generations.

The paper asks but doesn’t explicitly answer whether intellectual equity in publishing is something to aspire to. What is clear, it says, is that elite institutions “continue to be the locus of the practices, techniques, virtues and values that have come to define modern academic knowledge. They diffuse it, whether in the form of academic labor (personnel) or ideas (publication), from a concentrated center to a broader periphery.”

Unclear, meanwhile, “is the relationship of this system to the quality and diversity of ideas, indeed to the ways in which the very ideas of quality and diversity might be imagined to intersect.”

So what is to be done?

Perhaps surprisingly for humanists -- many of whom oppose rankings systems as incompatible with the ineffability of scholarly value, at least beyond traditional peer review -- Piper and Wellmon argue that “the answer is neither a return to ideals of incalculability nor a belief in the power of free knowledge. … What we need in our view is not less quantification, but more; not less mediation, but mediation of a different kind. It is not enough to demand intellectual diversity and assume its benefits. We need new ways of measuring, nurturing, valuing and, ultimately, conceiving of it.”

Today, they say, “we have new tools at our disposal that can allow us to develop alternative ways of measuring importance beyond simply counting titles and citation numbers. Major strides have been made in the fields of content analysis and cultural analytics that can allow us to retool our measurements of impact to account for values like diversity and novelty rather than just power and prestige. It is time we used them.”

Brad Fenwick, vice president of global and academic research relations for Elsevier, a major publisher of academic journals, criticized aspects of the paper’s methodology, saying that “data that I see could be explained by differences in the raw number and quality of submitted manuscripts between institutional types,” or even by data on secondary submissions in another journal.

Over all, he said, “There is a degree of bias in about everything. Whether the level, once documented, is sufficient to be a problem that requires a remedy is in the eye of the beholder and needs to be measured against the cost and consequences of the remedy.”

Both Piper and Wellmon rejected Fenwick’s criticism, saying potential explanations don’t invalidate the concentration of elite institutions in elite journals. And conflating “raw numbers” and “quality” is precisely what they don’t want to do, they said, since “quality” is so often used to justify the elite concentration.

They also noted that they partially address the raw-numbers question through their consideration of program size. As for studying selection bias by tracking all submissions, they said they’d jump on the opportunity -- were such data available.

Ali Sina Önder, a lecturer at the University of Bayreuth in Germany and an affiliated researcher at Sweden’s Uppsala Center for Fiscal Studies, co-wrote a 2014 paper suggesting that while academic hiring favors prestigious departments, all but the top performers in each program tend to be mediocre researchers down the line.

He said the new paper was valuable in that it demonstrates that the Pareto principle -- a belief that the top 20 percent of researchers publish 80 percent of the research -- is alive and well in the humanities.

However, Önder said, “the authors miss the essential point by saying that the elite universities publish [disproportionately] more in top journals. If we agree that elite universities are able to hire top researchers, then it shouldn’t be a surprise that we observe the 80 percent/20 percent divide at the university level, as well.”

The authors find less heterogeneity when they look at Ph.D. institutions instead of affiliation, he continued, “but again, that shouldn’t be a big surprise, either. We know that top graduates of middle-ranked universities outperform middle-ranked graduates of top universities, and this is obviously reflected in where they get hired.”

So, Önder said, “I don’t think one can really talk about a bias or inequality in terms of publications just by looking at these observations. There may be a bias towards elite universities, or elite universities may be publishing much better because they hire much better researchers. The U.S. is winning most medals in the Olympics, but this doesn’t mean that referees are biased towards U.S., nor does it mean it is unfair in any way.”

Many a skeptic might ask whether research in top journals is coming overwhelmingly from the most prestigious institutions because they graduate and employ the best scholars. Wellmon said via email that his findings show “such a disproportionate influence of Ph.D. institutions -- Yale and Harvard, for example -- that we didn’t find such an argument compelling. The hierarchies were so clear that we think other elements are at play, such as patronage networks and prestige.”

Wellmon said it “would be naive to assume that Harvard, Yale, Berkeley or Columbia are that much better at filtering out the best knowledge by dint of better knowledge, vastly better practices and training. For us, it seems more likely that these institutions have long set the norms and values governing the creation and communication of scholarly knowledge. They help decide what counts as ‘the best’ research. They may well do that and do it well, but not so exclusively as our findings suggest is the case.”